The name monophreak came about because I hear from one ear, and because of all the hacky ways (not quite telephone lines, but signals and frequencies) in which I have tried to make something sound decent in stereo. I will post a blog article about how I mix with mono hearing; there is a surprisingly large number of brilliant visual aids that you can use to work out time and space.

I have borrowed a BAHA5 on a band. Being into audio, I couldn’t wait to see if I could finally get to a stage where I can listen to music and mix in stereo. It’s been an interesting week and I thought I would capture the highs, lows and highs again of the process. If you attempt anything in this article, always ensure the volume is off before you start and raise it gradually so that you do not risk your hearing. You also need to ensure you are using hardware and software that is stable to prevent risk to your hearing. Everything you do will be at your own risk; I am providing this for educational purposes only.

How everything unfolded…

Equipped with my BAHA5, the first thing I wanted to do was listen to music in stereo for the first time in decades. Er, no. Immediate fail. I thought it was going to be as simple as attaching my BAHA to the iPhone through Made for iPhone (MfI), adding an AirPod, and away I go. From what I gather, single-sided hearing loss is trickier because various Bluetooth devices don’t align or connect in the same way due to protocol differences. I thought listening to something in stereo would be one of the first things most people would want to do, so I was amazed at how difficult this is. After trying numerous apps, I gave up and still haven’t found a reasonable solution for my iPhone.

Next up, my Mac. I am very proficient in Pro Audio software so thought I would build an amalgamated audio device. An amalgamated device is where you take two devices and combine them into one, so that the same sounds play out of the two devices at the same time. Fail. My BAHA5 would not connect to my Mac Mini over Bluetooth due to the type of Bluetooth technology used. After a bit of time researching, a kind eBayer and the purchase of a Cochlear Phone Clip, I had an ‘Aggregated Music Device’ on my Mac, ready to listen to stereo. Fail.

The Cochlear Phone Clip worked as a fantastic intermediary between the hearing aid and my Mac, but the latency between the two made the Aggregated Music Device (created via the Mac’s Audio MIDI Setup) unworkable, even with the latency drift options enabled. After a bit more research, it looks like the word clock timing (in other words, synchronisation) is not stable over Bluetooth.

Determined not to give up, I thought of as many crazy ways as I could to synchronise this latency and looked at a range of stereo delay plugins and apps. Absolute pain.

Then I stumbled on a magic piece of software called Audio HiJack, and ever since I have been listening in stereo with some limited mixing. It has been brilliant.

The Setup

Audio HiJack allows you to take control of the routing of audio from a Mac, pretty much as the name describes. It is made by a company called Rogue Amoeba. The key reason why this has been brilliant for a hearing aid such as the BAHA is that you can add a control for latency, which lets you align the left and right stereo channels.

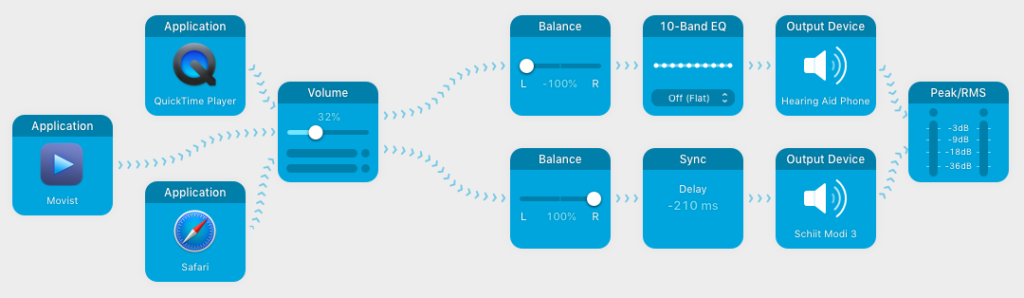

I set up Audio HiJack as follows:

On the left, I added applications I tend to use for my day-to-day music and media listening under the Application object. I then dragged and dropped the volume setting into the chain so that I could have some control over preventing sound from blasting my hearing aid. I dropped this to 0% at first so that I did not blast my ears while configuring. I then found 32% to be comfortable with my setup.

From that point, I split the left and right signals. I dragged and dropped two Balance blocks into the chain and moved the signal to either 100% left or 100% right. On the top chain, I added a 10-Band EQ to refine signals from the audio source and set the Output Device to Hearing Aid Phone. Inside here, I set the output to somewhere between 17% and 30% (I’m still getting used to hearing so this changes as I adapt).

On the right signal flow, I added the all-important Sync block and my audio out (headphones attached to a Schiit pre-amp on the Mac Pro), then routed both left and right into the Peak/RMS block to check that sound was flowing through correctly.
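For anyone who thinks better in code, here is a rough Python sketch of that signal chain. It is not how Audio HiJack works internally; it is just a toy model that assumes the volume sliders act as simple linear gains and that a Balance block at 100% one way simply silences the other channel. The function names, the choice of left for the hearing aid and the 25% hearing aid level (picked from my 17% to 30% range) are all illustrative assumptions.

```python
def apply_gain(frames, gain):
    """Scale every (left, right) frame by a linear gain (assumed slider model)."""
    return [(left * gain, right * gain) for left, right in frames]


def hard_pan(frames, side):
    """Keep one side of the stereo signal and silence the other,
    mimicking a Balance block pushed to 100% left or 100% right."""
    if side == "left":
        return [(left, 0.0) for left, _ in frames]
    if side == "right":
        return [(0.0, right) for _, right in frames]
    raise ValueError("side must be 'left' or 'right'")


# Two made-up stereo frames standing in for the application audio.
source = [(0.5, -0.5), (1.0, 0.25)]

# Master volume block: start at 0.0 while configuring, then raise slowly.
master = apply_gain(source, 0.32)

# Top chain: one side only, turned down further for the hearing aid output.
hearing_aid_feed = apply_gain(hard_pan(master, "left"), 0.25)

# Other chain: the opposite side only, sent on to the headphones.
headphone_feed = hard_pan(master, "right")
```

The point of modelling it this way is that each ear receives only its own side of the stereo image, with the hearing aid side sitting at a much lower level than the headphone side.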

After everything was configured, I clicked run (bottom left) and then headed to YouTube to listen to the classic Drifting Away by Faithless. I started off by making sure the YouTube volume slider was set to 0% and then carefully raised the volume.

It sounded messy and I was not sure this would work. I gradually increased the Sync block’s ms setting until the channels merged, and it was spectacular. Something strange happened when the two signals collided and it is hard to describe. A sense of space, probably from the stereo imaging, takes over and my brain could fill in some missing blanks. My conclusion was that this could be due to how many tracks have a core mono placement of kick drum, bass and vocals in a central column with other elements to the side. Since hearing aids focus on speech, the impact of hearing music with singing is particularly special.

For my AirPods, I built a similar setup and found that 70ms latency tended to be a good starting point, but this could change (typically by 10ms) depending on how many browser windows I had open or the software I was using. A headphone plugged into the audio jack or USB preamp for my good ear was a lot more stable. Interestingly, I am pretty certain that I had to tweak the volume output of the hearing aid slightly as the batteries neared their end of life, but I could be imagining this as I am still acclimatising to having some hearing.
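To give a feel for what a delay of this size means in practice, here is a minimal Python sketch that converts milliseconds into samples and pushes one channel back by that amount. It is only an illustration of the idea behind the Sync block, not Audio HiJack’s implementation; the function names and the 48 kHz sample rate are assumptions.

```python
def ms_to_samples(delay_ms, sample_rate=48_000):
    """Convert a delay in milliseconds to a whole number of samples."""
    return round(delay_ms * sample_rate / 1000)


def delay_channel(samples, delay_ms, sample_rate=48_000):
    """Prepend silence so the channel starts delay_ms later.
    The returned list is longer than the input by the padding."""
    padding = ms_to_samples(delay_ms, sample_rate)
    return [0.0] * padding + list(samples)


# The faster path is held back so it lines up with the slower Bluetooth path.
fast_path = [0.1, 0.2, 0.3]
aligned = delay_channel(fast_path, 70)

print(ms_to_samples(70))          # 3360 samples at 48 kHz
print(ms_to_samples(70, 44_100))  # 3087 samples at 44.1 kHz
print(ms_to_samples(10))          # 480 samples: the typical drift I saw
```

Even the 10ms wobble is hundreds of samples, which goes some way to explaining why the image falls apart so audibly when the latency shifts.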

In relation to mixing in Logic Pro X, my setup looks like the following:

I am going to follow up this post with a dedicated article on my first mix. I was expecting a disaster but ended up with a surprisingly solid mix, mainly because I could hear transients through the hearing aid that I had previously been missing.